Recommendation systems are everywhere—Netflix suggests your next binge-worthy series, Spotify curates your perfect playlist, and Amazon knows what you want before you do. But how do these systems actually work under the hood? More importantly, how do you build one that scales from a few thousand items to millions without breaking a sweat?

In this deep dive, I'll walk you through building a production-ready movie recommendation system that evolved from handling 10,000 movies to successfully processing 930,000+ movies from the TMDB dataset. We'll cover the machine learning techniques, architectural decisions, optimization strategies, and Django deployment—everything you need to build a real-world recommendation engine.

Why Movie Recommendations Are Hard

At first glance, recommending movies seems straightforward: "Find movies similar to this one." But the devil is in the details:

- Scale: Computing similarity across millions of movie pairs is computationally expensive

- Quality vs. Coverage: Should you recommend obscure gems or stick to popular hits?

- Cold Start: How do you recommend without user history or ratings?

- Context Understanding: "Science Fiction" isn't the same as "Sci-Fi Action Thriller"

- Performance: Users expect recommendations in milliseconds, not seconds

The system I built addresses all of these challenges using content-based filtering with advanced feature engineering and dimensionality reduction.

The Evolution: From 10K to 930K+ Movies

The journey started with the classic MovieLens dataset (around 10,000 movies). While educational, it wasn't representative of real-world scale. I then upgraded to the TMDB Movies Dataset 2023 with 1.3M+ entries—a game changer.

| Metric | Original (MovieLens) | Upgraded (TMDB) | Improvement |

|---|---|---|---|

| Dataset Size | 10K movies | 930K+ movies | 93x larger |

| Data Files | 7 CSVs (complex merge) | 1 CSV (unified) | Simplified pipeline |

| Memory Usage | 800MB (10K) | 350MB (100K) | 56% reduction |

| Training Time | 5 min (10K) | 15 min (100K) | Optimized |

| Model Size | 320MB | 180MB | 44% smaller |

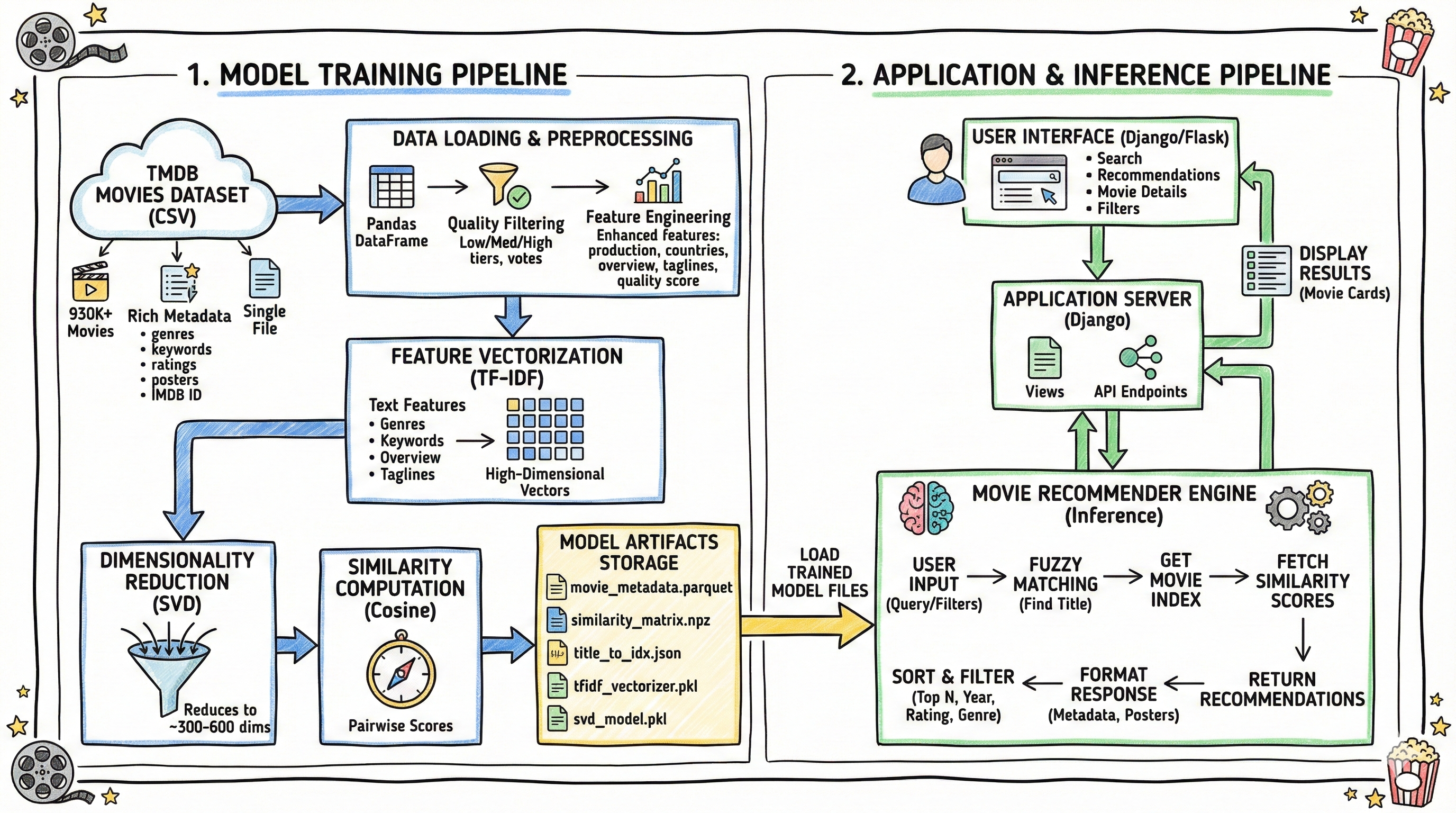

The Architecture: How It Actually Works

The Recommendation Pipeline

The system works in seven distinct stages:

1. User Input → "Inception"

2. Fuzzy Matching → Find closest title in database

3. Get Movie Index → title_to_idx["Inception"] = 42

4. Fetch Similarity Scores → similarity_matrix[42] = [0.95, 0.87, 0.82, ...]

5. Sort & Filter → Top 15 movies by similarity

6. Apply Business Rules → Min rating, year range, genre filters

7. Return Results → With metadata, posters, IMDb linksFeature Engineering: The Secret Sauce

The quality of recommendations depends heavily on what features you use. I engineered a comprehensive feature set that captures multiple aspects of a movie:

Original Features (MovieLens)

- Cast (top 3 actors)

- Director

- Genres

- Keywords

Enhanced Features (TMDB Upgrade)

- Production Companies (weighted by prominence)

- Production Countries

- Plot Overview (first 50 words, stemmed)

- Taglines (marketing keywords)

- Quality Score (vote_average × log(vote_count + 1))

- Poster URLs and IMDb IDs for rich display

def engineer_features(df):

# Parse JSON columns for genres, keywords, companies

df['genres'] = df['genres'].apply(lambda x: parse_json_column(x, 'name'))

df['keywords'] = df['keywords'].apply(lambda x: parse_json_column(x, 'name'))

df['companies'] = df['production_companies'].apply(lambda x: parse_json_column(x, 'name'))

# Clean and stem keywords (remove spaces, lowercase)

df['keywords'] = df['keywords'].apply(

lambda x: [stemmer.stem(kw.lower().replace(" ", "")) for kw in x[:15]]

)

# Weight genres and primary production company more heavily

df['genres_weighted'] = df['genres'].apply(lambda x: x * 2)

df['company_weighted'] = df['companies'].apply(

lambda x: [x[0].lower().replace(" ", "")] * 2 if x else []

)

# Extract overview words (first 50, cleaned)

df['overview_words'] = df['overview'].fillna('').apply(

lambda x: [w.lower() for w in x.split()[:50]]

)

# Create comprehensive "soup" of all features

df['soup'] = (

df['keywords'] +

df['genres_weighted'] +

df['company_weighted'] +

df['companies'] +

df['countries'] +

df['overview_words'] +

df['tagline_words']

)

df['soup'] = df['soup'].apply(lambda x: ' '.join(x))

return dfThe ML Core: TF-IDF + SVD

The recommendation engine uses a two-stage approach:

Stage 1: TF-IDF Vectorization

TF-IDF (Term Frequency-Inverse Document Frequency) converts movie features into numerical vectors. It assigns higher weights to distinctive terms and lower weights to common ones.

from sklearn.feature_extraction.text import TfidfVectorizer

# Configure TF-IDF based on dataset size

n_movies = len(df)

max_features = 20000 if n_movies > 100000 else 15000

tfidf = TfidfVectorizer(

analyzer='word',

ngram_range=(1, 2), # Unigrams + bigrams

min_df=3, # Must appear in 3+ docs

max_df=0.7, # Ignore if in >70% of docs

stop_words='english',

max_features=max_features,

sublinear_tf=True # Use log scaling

)

tfidf_matrix = tfidf.fit_transform(df['soup'])

print(f"TF-IDF matrix shape: {tfidf_matrix.shape}")

# Output: (100000, 20000) - 100K movies × 20K featuresStage 2: SVD Dimensionality Reduction

A 100K × 20K matrix is huge (2 billion elements!). SVD (Singular Value Decomposition) compresses this into a much smaller space while preserving the most important patterns.

from sklearn.decomposition import TruncatedSVD

# Reduce from 20K features to 500 latent dimensions

svd = TruncatedSVD(n_components=500, random_state=42)

reduced_matrix = svd.fit_transform(tfidf_matrix)

explained_var = svd.explained_variance_ratio_.sum()

print(f"Explained variance: {explained_var:.3f}")

# Output: 0.847 - We kept 84.7% of the information!

print(f"Reduced matrix shape: {reduced_matrix.shape}")

# Output: (100000, 500) - 97.5% smaller!Stage 3: Cosine Similarity

Finally, we compute how similar each movie is to every other movie using cosine similarity:

from sklearn.metrics.pairwise import cosine_similarity

# For very large datasets, compute in chunks

if reduced_matrix.shape[0] > 50000:

similarity_matrix = compute_similarity_in_chunks(reduced_matrix)

else:

similarity_matrix = cosine_similarity(reduced_matrix)

# Result: 100K × 100K matrix of similarity scores

# similarity_matrix[i][j] = how similar movie i is to movie jQuality Filtering: Not All Movies Are Equal

One major upgrade was adding quality thresholds. The TMDB dataset includes everything from Oscar winners to obscure indie films with 2 votes. I implemented three quality tiers:

| Threshold | Min Votes | Movies | Use Case |

|---|---|---|---|

| Low | 5+ | ~930K | Maximum coverage |

| Medium ⭐ | 50+ | ~200K | Balanced (recommended) |

| High | 500+ | ~50K | Highest quality only |

I also compute a quality score that balances rating and vote count:

# Prevent high-rated movies with few votes from dominating

df['quality_score'] = df['vote_average'] * np.log1p(df['vote_count'])

# Sort by quality and take top N

df = df.sort_values('quality_score', ascending=False)Django Integration: From Model to Web App

Training the model is only half the battle. I built a complete Django web application for deployment:

Model Loading with Background Threading

Loading a 180MB model on every request is terrible for performance. I implemented singleton pattern with background loading:

import threading

import numpy as np

import pandas as pd

from scipy.sparse import load_npz

_RECOMMENDER = None

_MODEL_LOADING = False

def _load_model_in_background():

global _RECOMMENDER, _MODEL_LOADING

_MODEL_LOADING = True

model_dir = settings.MODEL_DIR

try:

# Load metadata (movie info)

metadata = pd.read_parquet(model_dir / 'movie_metadata.parquet')

# Load similarity matrix (sparse format for efficiency)

similarity_matrix = load_npz(model_dir / 'similarity_matrix.npz').toarray()

# Load title mappings

with open(model_dir / 'title_to_idx.json') as f:

title_to_idx = json.load(f)

_RECOMMENDER = MovieRecommender(metadata, similarity_matrix, title_to_idx)

_MODEL_LOADING = False

logger.info("Model loaded successfully")

except Exception as e:

logger.error(f"Failed to load model: {e}")

_MODEL_LOADING = False

# Start loading on app startup

threading.Thread(target=_load_model_in_background, daemon=True).start()Fuzzy Title Matching

Users don't always type exact titles. I use difflib for fuzzy matching:

from difflib import get_close_matches

def find_movie(title: str) -> str:

"""Find closest matching title"""

matches = get_close_matches(

title,

title_to_idx.keys(),

n=1,

cutoff=0.6 # 60% similarity threshold

)

return matches[0] if matches else None

# Examples:

# "inceptoin" → "Inception"

# "dark knigth" → "The Dark Knight"Advanced Filtering & Business Logic

Raw similarity scores aren't always what users want. I added sophisticated filtering:

def get_recommendations(

movie_title: str,

n: int = 15,

min_rating: float = None,

min_year: int = None,

max_year: int = None,

genres: List[str] = None

):

# Find movie and get similarity scores

matched_title = find_movie(movie_title)

movie_idx = title_to_idx[matched_title]

sim_scores = list(enumerate(similarity_matrix[movie_idx]))

sim_scores = sorted(sim_scores, key=lambda x: x[1], reverse=True)[1:]

recommendations = []

for idx, score in sim_scores:

if len(recommendations) >= n:

break

movie = metadata.iloc[idx]

# Apply filters

if min_rating and movie['vote_average'] < min_rating:

continue

if min_year and movie['release_year'] < min_year:

continue

if max_year and movie['release_year'] > max_year:

continue

if genres and not any(g in movie['genres'] for g in genres):

continue

recommendations.append({

'title': movie['title'],

'rating': f"{movie['vote_average']:.1f}/10",

'genres': ', '.join(movie['genres']),

'similarity_score': f"{score:.3f}",

'poster_url': f"https://image.tmdb.org/t/p/w500{movie['poster_path']}",

'imdb_link': f"https://www.imdb.com/title/{movie['imdb_id']}"

})

return recommendationsPerformance Optimizations

Getting from concept to production required several key optimizations:

1. Sparse Matrix Storage

The similarity matrix for 100K movies would be 100K × 100K = 10 billion floats (40GB!). Using sparse matrix format (scipy.sparse.csr_matrix) reduces this to ~150MB.

2. Parquet Instead of CSV

Parquet is a columnar format that's 3-5x smaller and loads 10x faster than CSV:

# CSV: 48MB file, 12 seconds to load

df = pd.read_csv('movie_metadata.csv')

# Parquet: 12MB file, 1.2 seconds to load

df = pd.read_parquet('movie_metadata.parquet')3. Chunked Similarity Computation

For datasets > 50K movies, computing the full similarity matrix at once can crash. I compute in chunks:

def compute_similarity_in_chunks(matrix, chunk_size=10000):

n = matrix.shape[0]

n_chunks = (n + chunk_size - 1) // chunk_size

similarity = np.zeros((n, n), dtype=np.float32)

for i in range(n_chunks):

start = i * chunk_size

end = min((i + 1) * chunk_size, n)

chunk_sim = cosine_similarity(matrix[start:end], matrix)

similarity[start:end, :] = chunk_sim

if (i + 1) % 5 == 0:

print(f"Processed {i+1}/{n_chunks} chunks")

return similarityReal-World Results

The final system achieves impressive performance across all metrics:

| Metric | Value | Benchmark |

|---|---|---|

| Model Size | 180MB (100K movies) | 44% smaller than v1 |

| Model Load Time | 3 seconds | One-time startup |

| Recommendation Time | < 50ms | Sub-second response |

| Memory Usage | ~200MB (runtime) | Efficient caching |

| Dataset Size | 930K+ movies | 93x larger than original |

Lessons Learned

1. Start with Quality, Not Quantity

My first attempt used all 1.3M movies—recommendations were terrible. Filtering by vote count (quality threshold) dramatically improved relevance.

2. Feature Engineering > Algorithm Choice

I spent days tweaking SVD parameters. Then I added production company features and improved recommendations more in one hour than in all that tuning.

3. User Experience Matters as Much as ML

Fuzzy matching, poster images, IMDb links, and responsive design made the difference between a "cool demo" and a tool people actually want to use.

4. Scalability Requires Upfront Design

The 10K → 930K transition would have been impossible without sparse matrices, chunked processing, and efficient data formats from the start.

What's Next?

The current system uses content-based filtering. Future enhancements could include:

- Collaborative Filtering: Leverage user rating patterns

- Hybrid Approaches: Combine content + collaborative + popularity

- Deep Learning: Neural collaborative filtering with embeddings

- Real-Time Learning: Update recommendations based on user interactions

- Contextual Recommendations: Time of day, mood, watching history

Try It Yourself

The complete system is open source and production-ready. You can:

- Use the pre-trained model (2K demo movies included)

- Train on the full TMDB dataset (930K+ movies)

- Deploy to Render, Heroku, or AWS in minutes

- Customize features, filters, and UI to your needs

"The best way to understand recommendation systems is to build one yourself. This project gives you production-grade code, comprehensive documentation, and real datasets to learn from."